What’s Real in AI Testing Today

How AI is beginning to remove the most expensive manual work in testing—and what that means for Quality Engineering.

🏁 TL;DR

AI in testing is moving beyond accelerating manual effort in scripting and maintenance toward eliminating it.

This shift opens new opportunities in coverage improvement and legacy migration.

The biggest blockers are still data quality, context drift, and governance.

The most successful organizations measure effort removed and trust established, not just execution speed.

Everyone says they’re “using AI in testing.”

Almost no one can explain what that actually means.

Executives are under pressure to show AI progress. Vendors are racing to add AI labels. QA teams are running pilots without measurable ROI.

The truth is that AI is already changing testing — but not by reimagining it from scratch. It’s quietly removing the manual effort that made automation expensive in the first place.

In my recent 3-part series, we explored the transformative vision of Agentic AI. Now, let’s ground that future in today’s reality. Every vendor is talking about AI, so let’s separate the noise from what is actually happening: here are the five real adoption patterns emerging right now.

The Cost Problem AI Is Actually Solving

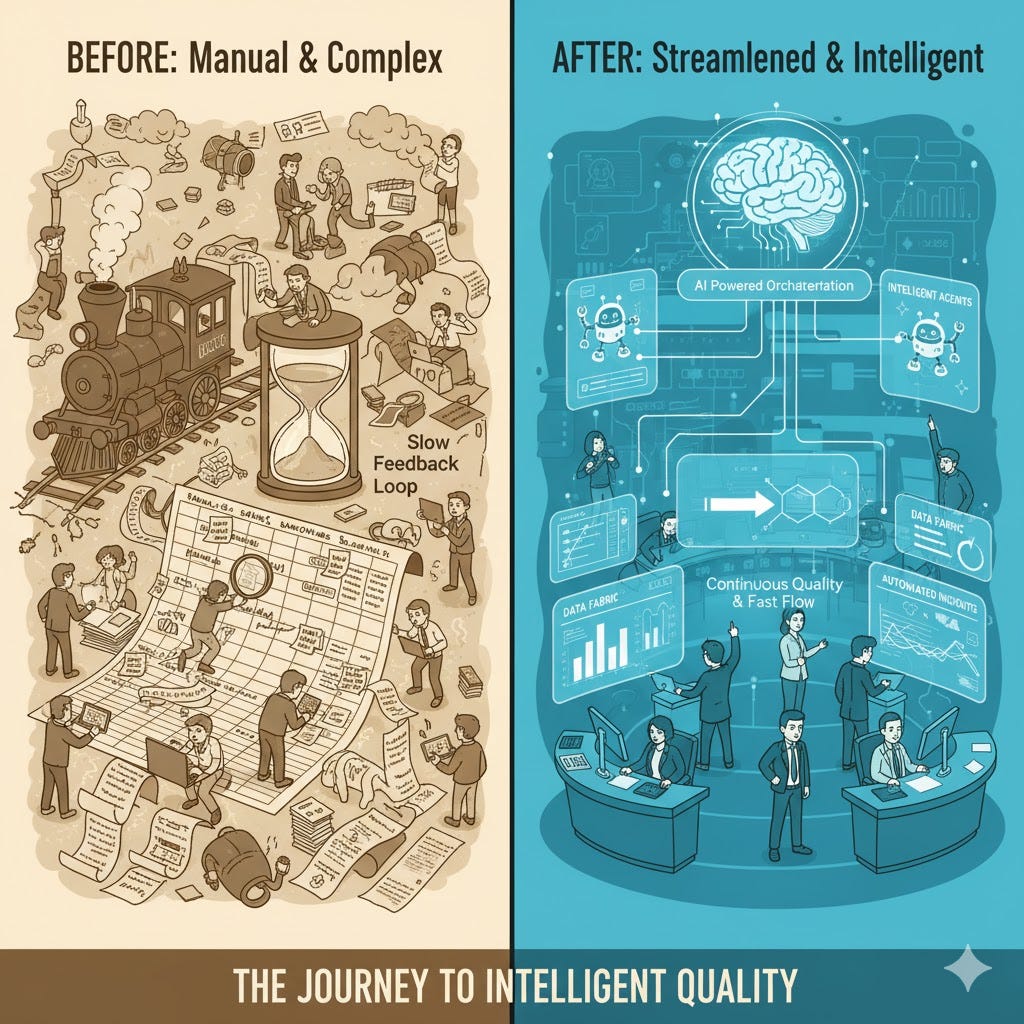

Before AI, the economics of automation were dominated by one unavoidable reality: creating and maintaining scripts was manual, expensive, and endless.

Even organizations with mature frameworks struggled to escape the maintenance trap. Every change in UI, data, or logic broke something, and fixing it meant more human rework.

According to multiple analyst studies — including the World Quality Report and Forrester’s State of Continuous Testing — enterprises spend 40 to 60 percent of total QA effort simply maintaining existing scripts, not creating new coverage.

That maintenance debt is what AI came for. Not to replace testers, but to remove repetitive human effort that drains time, talent, and budget.

Early AI Adoption: The “Horseless Carriage” Phase

AI in testing today looks a lot like the early days of the automobile.

The first cars were called horseless carriages because engineers simply bolted engines onto buggies instead of rethinking how vehicles should work.

We’re doing the same thing in testing.

AI is being layered on top of legacy automation pipelines instead of designing entirely new ones.

That’s a necessary first step — and it’s why so many executives are unsure whether AI is truly transformative or just another productivity layer.

Five Patterns Defining AI in Testing (2025)

Across hundreds of pilots and tool experiments, five real adoption patterns are emerging. Each represents a step in how AI is being applied to testing today. Some are incremental. One is transformational.

1. AI Copilots for Test Design

Large language models are now assisting testers in creating test cases and automation scripts.

They speed up authoring and make design more accessible across roles, which reduces reliance on a few automation specialists.

However, these copilots still struggle to maintain full business context. They often produce inconsistent coverage or redundant cases that require human review.

2. Test Case Mining

AI can now extract test scenarios from Jira stories, requirement documents, and past test runs.

This improves traceability and reuse, especially in large backlogs where manual tagging has fallen behind.

But the “garbage in, garbage out” problem is real. Test assets and user stories are frequently inconsistent, outdated, or poorly structured. The result is noisy, incomplete, or misleading outputs.
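To make the “garbage in, garbage out” point concrete, here is a minimal sketch of the mining step. It assumes acceptance criteria are written as Given/When/Then lines inside the story description, and it uses simple pattern matching as a stand-in for the language models that real tools use. The function name `mine_scenarios` is hypothetical. Scenarios with no `Then` step are returned separately, because a scenario without an expected outcome cannot become a test.

```python
import re

# Gherkin-style step lines; this keyword convention is an assumption about how stories are written.
STEP = re.compile(r"^\s*(given|when|then|and)\b\s*(.+)$", re.IGNORECASE)


def mine_scenarios(description: str) -> tuple[list[list[str]], list[list[str]]]:
    """Hypothetical helper: split a story's acceptance criteria into (complete, incomplete) scenarios."""
    scenarios: list[list[str]] = []
    current: list[str] = []
    for line in description.splitlines():
        match = STEP.match(line)
        if not match:
            continue  # narrative text, headings, and blank lines are ignored
        keyword = match.group(1).capitalize()
        if keyword == "Given" and current:
            scenarios.append(current)  # a new Given starts a new scenario
            current = []
        current.append(f"{keyword} {match.group(2).strip()}")
    if current:
        scenarios.append(current)
    # A scenario is only testable if it states an expected outcome.
    complete = [s for s in scenarios if any(step.startswith("Then ") for step in s)]
    incomplete = [s for s in scenarios if s not in complete]
    return complete, incomplete


story = (
    "As a shopper I want discount codes.\n"
    "Acceptance criteria:\n"
    "Given a cart with 2 items\n"
    "When I apply code SAVE10\n"
    "Then the total drops by 10%\n"
    "Given an expired code\n"
    "When I apply it\n"
)
complete, incomplete = mine_scenarios(story)
print(len(complete), len(incomplete))  # 1 1
print(incomplete[0])  # ['Given an expired code', 'When I apply it']
```

A story with no structured criteria simply yields nothing. That is the noisy-backlog problem in miniature: the quality of what you mine is capped by the quality of what was written.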

3. Self-Healing Automation

AI models are being trained to repair broken locators, element paths, or scripts during execution.

This reduces flakiness and the day-to-day maintenance work that once consumed entire sprints.

Self-healing, however, is still mostly reactive. It works best in predictable, UI-heavy systems and struggles with dynamic or deeply integrated workflows.
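The core idea behind many self-healing approaches can be sketched without any AI at all. Record a fingerprint of each element when the test is authored, and when the primary locator fails, fall back to the most similar element on the page. The sketch below assumes a simplified, hypothetical `Element` model rather than a real browser-driver API, and it uses a plain attribute-overlap score in place of a trained model. It also returns a flag whenever it heals, so a human can review the repair instead of having it happen silently.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical, simplified stand-in for a page element (not a real driver API)."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""


def similarity(fingerprint: Element, candidate: Element) -> float:
    """Share of the recorded attributes (plus visible text) that the candidate still matches."""
    if fingerprint.tag != candidate.tag:
        return 0.0
    hits = sum(1 for k, v in fingerprint.attrs.items() if candidate.attrs.get(k) == v)
    hits += int(candidate.text == fingerprint.text)
    return hits / (len(fingerprint.attrs) + 1)


def find_with_healing(page: list[Element], element_id: str, fingerprint: Element,
                      threshold: float = 0.5) -> tuple[Element, bool]:
    """Return (element, healed). healed=True means the fallback was used and needs review."""
    # 1. Primary locator: exact id match.
    for element in page:
        if element.attrs.get("id") == element_id:
            return element, False
    # 2. Locator broke: fall back to the most similar element, if it is similar enough.
    best = max(page, key=lambda e: similarity(fingerprint, e), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best, True
    raise LookupError(f"no element matches id {element_id!r} or its recorded fingerprint")


fingerprint = Element("button", {"id": "submit-btn", "class": "btn primary", "name": "submit"}, "Submit order")
page = [
    Element("button", {"id": "cancel", "class": "btn"}, "Cancel"),
    # A release renamed the id, but the rest of the element is unchanged.
    Element("button", {"id": "checkout-submit", "class": "btn primary", "name": "submit"}, "Submit order"),
]
element, healed = find_with_healing(page, "submit-btn", fingerprint)
print(element.attrs["id"], healed)  # checkout-submit True
```

The `threshold` parameter is the governance lever. Set it too low and the test will happily click the wrong button; set it too high and nothing heals. The example also shows why self-healing is reactive: it only helps when the element still exists in a recognizable form.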

4. AI-Augmented Analytics and Triage

Machine learning models are clustering failures, predicting defect hotspots, and highlighting high-risk modules before release.

This shortens feedback loops and gives engineering teams clearer visibility into where risk is building up.

The limitation is data depth. These systems depend on consistent telemetry, clean logs, and historical trend data that many organizations still lack.
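A simple way to see what failure clustering does is to normalize each failure message into a signature and then group tests by that signature. Normalization strips out volatile parts such as numbers, hex addresses, and quoted values. This is a rule-based sketch rather than the machine learning models mentioned above. The regular expressions assume fairly conventional error messages, and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Volatile fragments replaced by placeholders, applied in this order (assumed message formats).
VOLATILE = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<HEX>"),
    (re.compile(r"'[^']*'|\"[^\"]*\""), "<STR>"),
    (re.compile(r"\d+"), "<NUM>"),
]


def signature(message: str) -> str:
    """Normalize a failure message so that failures with the same cause compare equal."""
    sig = message.strip()
    for pattern, placeholder in VOLATILE:
        sig = pattern.sub(placeholder, sig)
    return sig


def cluster_failures(failures: list[tuple[str, str]]) -> list[tuple[str, list[str]]]:
    """Group (test_name, message) pairs by signature, largest cluster first."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for test_name, message in failures:
        clusters[signature(message)].append(test_name)
    return sorted(clusters.items(), key=lambda item: len(item[1]), reverse=True)


failures = [
    ("test_checkout", "TimeoutError: element '#pay-42' not visible after 30s"),
    ("test_login", "AssertionError: expected 200, got 500"),
    ("test_refund", "TimeoutError: element '#refund-7' not visible after 30s"),
]
for sig, tests in cluster_failures(failures):
    print(len(tests), sig)
# 2 TimeoutError: element <STR> not visible after <NUM>s
# 1 AssertionError: expected <NUM>, got <NUM>
```

Twenty red tests that share one signature usually point to a single root cause, which is why the largest clusters come first. The sketch also illustrates the data-depth limitation: if your logs are inconsistent, the signatures will be too.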

5. AI-Generated Test Assets

This is the most significant breakthrough so far.

AI can now capture manual test executions, learn the intent behind them, and automatically generate reusable, self-maintaining automation scripts.

By removing the human effort of authoring and maintaining scripts, these systems address one of the most expensive bottlenecks in Quality Engineering.

They still require governance, explainability, and human validation, but they mark the first real step toward autonomous test generation.

The Quiet Breakthrough: Removing Manual Effort

Pattern #5 marks the most meaningful shift so far.

For the first time, AI is not just accelerating testing — it’s removing the need for humans to create and maintain scripts altogether.

New AI-driven tools can observe manual test executions, learn the underlying logic, and automatically generate reusable automation scripts.

They then keep those scripts in sync with the application, adapting as elements or workflows change.

This removes two of the most costly steps in traditional QA:

Creating automation scripts from scratch, and

Maintaining them whenever the app changes.

The impact is enormous. Teams no longer spend entire sprints fixing test suites.

Automation engineers can focus on test strategy and risk coverage instead of syntax and locators.

And this shift opens up use cases that weren’t possible before:

1. Coverage Improvement

By capturing real user journeys in production or UAT environments, AI can compare them with QA coverage and identify where testing misses the scenarios that matter most.

For the first time, teams can measure test coverage based on actual user behavior, not assumptions.
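Here is a minimal sketch of that comparison. It assumes user journeys have already been captured as ordered lists of screen or step names, and the step names and function names are hypothetical. The sketch measures coverage as the share of observed step-to-step transitions that at least one QA test exercises, weighted by how many production journeys contain each transition. It then lists the uncovered transitions in order of real usage.

```python
from collections import Counter


def transitions(journey: list[str]) -> list[tuple[str, str]]:
    """Unique consecutive step pairs, in order of first appearance."""
    return list(dict.fromkeys(zip(journey, journey[1:])))


def coverage_gaps(prod_journeys: list[list[str]], qa_journeys: list[list[str]]):
    """Return (usage-weighted transition coverage, uncovered transitions ranked by usage)."""
    covered = {t for journey in qa_journeys for t in transitions(journey)}
    # Count how many production journeys contain each transition.
    usage: Counter = Counter()
    for journey in prod_journeys:
        usage.update(transitions(journey))
    total = sum(usage.values())
    hit = sum(count for t, count in usage.items() if t in covered)
    gaps = [(t, count) for t, count in usage.most_common() if t not in covered]
    return (hit / total if total else 1.0), gaps


prod = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "account", "orders"],
]
qa = [["home", "search", "product", "cart", "checkout"]]
ratio, gaps = coverage_gaps(prod, qa)
print(f"{ratio:.0%}")  # 80%
print(gaps)  # [(('home', 'account'), 1), (('account', 'orders'), 1)]
```

Transition coverage is only one possible metric. A real tool might weight by traffic, risk, or business value instead, but the principle of ranking gaps by actual usage stays the same.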

2. Legacy Migration

Many enterprises remain locked into legacy automation frameworks that are no longer evolving or have no AI roadmap.

With AI now able to execute legacy tests and generate modern equivalents, organizations can migrate to unified, intelligent testing platforms without starting from zero.

Removing manual scripting is the first genuine economic disruption in testing since automation itself.

The Remaining Challenges

AI has momentum, but it still faces practical limitations that every organization must solve before scaling enterprise-wide.

Data readiness. Most teams lack the structured, labeled test data needed for consistent AI learning.

Context drift. Models misinterpret evolving business logic or lose context across releases.

Governance debt. Enterprises are still defining accountability.

If an AI-generated test misses a critical defect, who is responsible — the model, the data it was trained on, or the human who approved it?

Without clear accountability frameworks, risk silently accumulates.

Explainability. Teams need to understand why the AI decided to create, skip, or repair a test, especially in regulated industries.

AI will not replace testing governance — it will make governance even more important.

The Executive View: What This Phase Really Means

Executives should see this moment for what it is: a transition from accelerating manual work to autonomously generating test assets.

AI in testing is no longer a theory. It’s running in production. But it’s uneven, immature, and still dependent on human oversight.

The most advanced teams share three characteristics:

They measure manual effort removed, not just automation speed gained.

They integrate human validation and audit checkpoints into every AI-generated artifact.

They treat automation assets as living systems that learn and improve, not static deliverables.

This is the new definition of testing maturity — not how much you automate, but how intelligently you govern what automation creates.

The Real Transformation Has Begun

AI in testing isn’t about doing the same work faster.

It’s about removing the work that made testing expensive — script authoring, maintenance, and triage.

The tester’s role is shifting from execution to oversight, from writing scripts to managing systems of intelligence.

This is not the end of testing. It’s the start of something smarter, faster, and far more scalable.

The next generation of Quality Engineering will belong to leaders who design for that reality now.