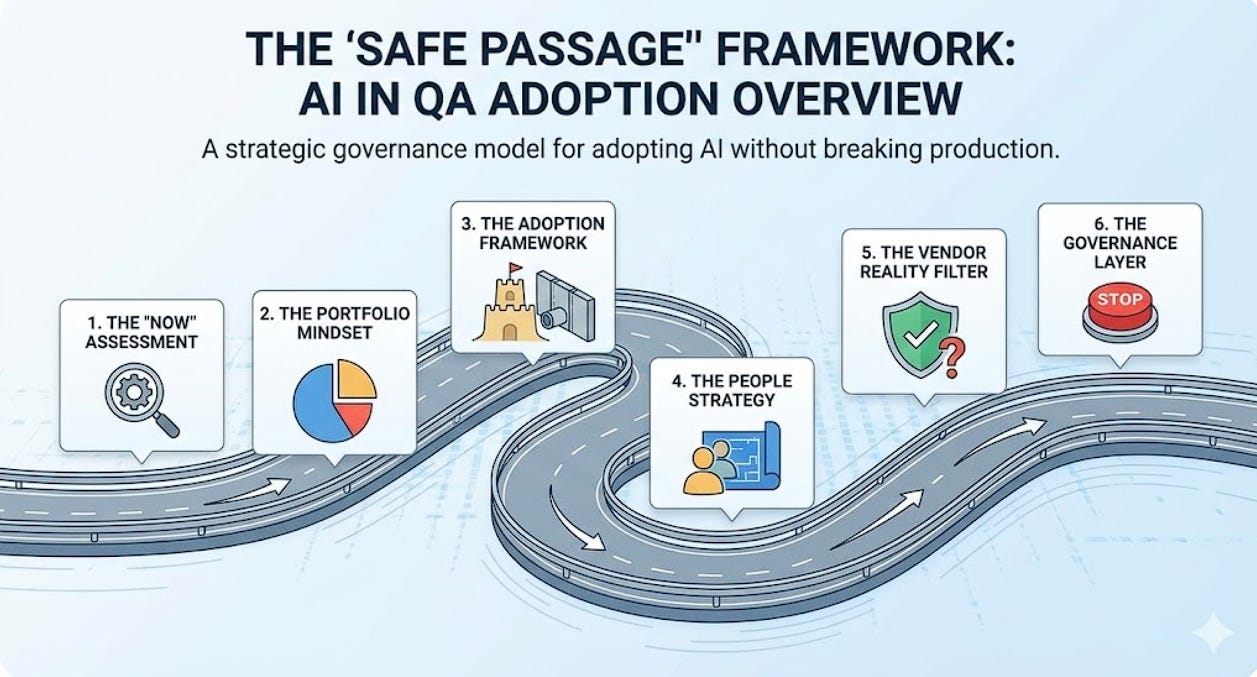

The “Safe Passage” Framework: How to Adopt AI in QA Without Breaking Production

We don’t need a crystal ball for the future of testing. We need a governance model for the chaos of today.

Every QA Director I talk to is currently stuck in the “Anxiety of Relevance.”

We are trapped between two opposing forces.

Force A (The Market/CEO): “Innovate faster. Adopt GenAI. Why are we moving slower than the competition?”

Force B (The Reality/CTO): “Do not break the pipeline. Do not release bugs. Stability is paramount.”

It feels impossible to satisfy both. If you move fast with unproven AI tools, you risk destabilizing your release cadence. If you prioritize safety and ignore AI, you risk your team becoming obsolete.

The mistake we are making is trying to solve this tension with tools. We are hunting for the “perfect AI platform” that is both revolutionary and 100% safe. Newsflash: It doesn’t exist yet.

The solution isn’t a tool. It’s a Governance Framework.

We need a system that allows us to absorb innovation constantly without jeopardizing our current delivery commitments. I call this the “Safe Passage” Framework.

Here is the expanded blueprint for building an adaptive, AI-ready QA organization, including the exact steps to take next week.

1. The “Now” Assessment: The Toil Audit

The Mental Model: Differentiate “Cool” from “Crucial.”

There is a massive gap between “Demoware” (what vendors show you on a webinar) and “Production-Ready” (what actually works in your messy architecture). If you chase every shiny object, you will burn out your team.

We need to stop looking for “Autonomous Testing” (which isn’t fully here yet) and start optimizing for “Assisted Engineering.”

The Strategy: Ignore the “Future of QA” for a moment. Look at the “Present of Pain.” AI is currently an incredible force multiplier for drudgery. It is terrible at intuition, but it is excellent at boilerplate.

Director’s Action (Monday Morning):

Run a “Toil Audit”: Don’t ask your team “What AI tools do you want?” Ask them: “What are the top 3 tasks that made you hate your job last week?”

Target the Data: Usually, the answer is “Waiting for Test Data” or “Fixing flaky selectors.”

The “One Tool” Rule: Pick one specific category of toil. Find an AI tool that solves that. Ignore everything else for Q1.

The Metric: Measure “Hours returned to the engineer,” not “Tests created.”
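Here is a minimal Python sketch of the Toil Audit and its metric. It assumes the survey answers arrive as short task names per engineer, and that you log weekly hours spent on the chosen toil before and after adopting the tool. The survey data, engineer names, and function names are all hypothetical.

```python
from collections import Counter

# Hypothetical answers to "What are the top 3 tasks that made you hate your job last week?"
SURVEY = {
    "ana": ["waiting for test data", "fixing flaky selectors", "manual regression"],
    "ben": ["fixing flaky selectors", "waiting for test data", "env setup"],
    "chen": ["waiting for test data", "triaging CI noise", "env setup"],
}


def rank_toil(survey: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Count how many engineers named each task, most-named first."""
    counts = Counter()
    for tasks in survey.values():
        # Only the first 3 answers count; dict.fromkeys drops repeats but keeps order.
        for task in dict.fromkeys(tasks[:3]):
            counts[task] += 1
    return counts.most_common()


def hours_returned(baseline: dict[str, float], current: dict[str, float]) -> float:
    """Weekly hours given back on the chosen toil: time before the tool minus time after.

    Engineers missing from `current` are treated as spending 0 hours on it now.
    """
    return sum(baseline[e] - current.get(e, 0.0) for e in baseline)


# The "One Tool" Rule: focus Q1 on the single most-named category.
top_task, votes = rank_toil(SURVEY)[0]
print(f"Q1 focus: {top_task} ({votes} of {len(SURVEY)} engineers)")
```

Note that the metric counts hours, not artifacts: a tool that generates 500 tests but leaves the team waiting just as long for data scores zero.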

2. The Portfolio Mindset: Managing Risk

The Mental Model: Treat your Test Suite like an Investment Portfolio.

A financial advisor would never tell you to put 100% of your money into a volatile crypto coin. Yet, we often try to “migrate” our entire testing strategy to a new AI tool at once. That is suicide.

Adopt the 70/20/10 Rule for your QA Portfolio:

70% Conservative (The Core): Your existing Selenium/Playwright/Cypress suites. The boring, reliable stuff that protects the revenue. Do not touch this yet.

20% Moderate (The Optimization): AI tools that “assist” the core (e.g., self-healing plugins, AI-generated unit tests).

10% Aggressive (The Moonshots): Pure “Agentic AI” that explores the app without scripts. This is high risk, high reward.

Director’s Action:

Map Your Tools: Draw a pie chart of where your team’s effort goes. Is 100% of it in “Conservative”? You are falling behind. Is more than 50% in “Moonshots”? You are about to break production.

Rebalance: Explicitly allocate budget and time to the 10% bucket. If you don’t budget for R&D, R&D won’t happen.
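The mapping and rebalancing steps come down to simple arithmetic. This sketch assumes you can estimate effort hours per bucket; the bucket keys and function names are hypothetical, and the two warnings mirror the thresholds in “Map Your Tools.”

```python
# Target split from the 70/20/10 rule, in percent of total QA effort.
TARGET_PCT = {"conservative": 70, "moderate": 20, "aggressive": 10}


def effort_pct(effort_hours: dict[str, float]) -> dict[str, float]:
    """Share of total effort (percent) in each bucket; missing buckets count as 0."""
    total = sum(effort_hours.get(b, 0) for b in TARGET_PCT)
    if total <= 0:
        raise ValueError("no effort recorded")
    return {b: 100 * effort_hours.get(b, 0) / total for b in TARGET_PCT}


def portfolio_check(effort_hours: dict[str, float]) -> tuple[list[str], dict[str, float]]:
    """Return warnings plus the hours to move into (+) or out of (-) each bucket."""
    pct = effort_pct(effort_hours)
    total = sum(effort_hours.get(b, 0) for b in TARGET_PCT)
    warnings = []
    if pct["conservative"] == 100:
        warnings.append("100% Conservative: falling behind")
    if pct["aggressive"] > 50:
        warnings.append(">50% Moonshots: about to break production")
    deltas = {b: total * t / 100 - effort_hours.get(b, 0) for b, t in TARGET_PCT.items()}
    return warnings, deltas


warnings, deltas = portfolio_check({"conservative": 100})
print(warnings)  # ['100% Conservative: falling behind']
print(deltas)    # {'conservative': -30.0, 'moderate': 20.0, 'aggressive': 10.0}
```

The positive deltas are the “Rebalance” step in hours: in this example, 10 hours a sprint that have to be explicitly budgeted for the Moonshot bucket.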

3. The Adoption Framework: The “Sandbox Protocol”

The Mental Model: The Air Gap.

How do you let your team experiment with wild AI agents without risking a P1 incident? You must firewall the innovation. You need a formalized “Intake Process” for new tech.

The “Shadow Mode” Pipeline:

The Sandbox: New tools run here first against dummy data. No connection to prod.

Shadow Mode: If a tool graduates from the sandbox, it runs in your CI/CD pipeline in “Shadow Mode.” It executes tests, logs results, and consumes resources, but it cannot fail the build. It is invisible to the developers.

The Value Gate: The AI suite must run in Shadow Mode for 3 consecutive sprints. We compare its results to the legacy suite.
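One way to wire Shadow Mode into CI, sketched in Python under the assumption that the AI tool can be invoked as a command-line process: run it, append the outcome to a log for the Value Gate comparison, and always return exit code 0 so the step can never fail the build. The log format, timeout, and function name are illustrative, not part of any specific CI product.

```python
import json
import subprocess
import time


def run_in_shadow_mode(cmd: list[str], log_path: str, timeout_s: int = 1800) -> int:
    """Run an experimental AI test command without letting it fail the build.

    The outcome is appended to a JSON-lines log so it can later be compared
    with the legacy suite. The return value is always 0.
    """
    started = time.time()
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
        outcome = "pass" if result.returncode == 0 else "fail"
        detail = result.stdout[-2000:] + result.stderr[-2000:]
    except (OSError, subprocess.TimeoutExpired) as exc:
        # A missing binary or a hung tool is logged, never propagated to the pipeline.
        outcome, detail = "error", repr(exc)
    record = {
        "cmd": cmd,
        "outcome": outcome,
        "seconds": round(time.time() - started, 1),
        "detail": detail,
    }
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return 0  # Shadow Mode: the AI suite can never fail the build
```

In a pipeline you would run this as a separate job next to the legacy suite. The legacy job keeps its normal exit code, so only the proven suite can block a merge, while the log accumulates the evidence the Value Gate needs.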

Director’s Action:

Set the Exit Criteria: Before you install a tool, write down the number. “This tool only graduates to production if its False Positive Rate is < 5%.”

The Parallel Run: Challenge your Senior Architect to set up a parallel pipeline job for the new tool by Wednesday.
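The exit criterion and the three-sprint Value Gate can be written down as code before the tool is installed. This sketch assumes each AI-suite result is triaged as “real defect” or “no defect” (for example, by checking it against the legacy suite plus a human look); the `ShadowRun` record and function names are hypothetical. It uses the standard definition of false positive rate, FP / (FP + TN). Some teams instead track false alarms as a share of all AI failures, which is a different number, so pick one definition and write it next to the threshold.

```python
from dataclasses import dataclass


@dataclass
class ShadowRun:
    """One AI-suite result from Shadow Mode, after triage."""
    sprint: int
    ai_failed: bool
    real_defect: bool  # confirmed by triage, e.g. the legacy suite or a human also saw it


def false_positive_rate(runs: list[ShadowRun]) -> float:
    """FP / (FP + TN): share of defect-free runs that the AI suite still failed."""
    negatives = [r for r in runs if not r.real_defect]
    if not negatives:
        return float("nan")  # undefined; NaN < threshold is False, so the sprint cannot pass
    return sum(r.ai_failed for r in negatives) / len(negatives)


def graduates(runs: list[ShadowRun], max_fpr: float = 0.05, sprints: int = 3) -> bool:
    """True only if the latest `sprints` consecutive sprints each stayed under max_fpr."""
    observed = sorted({r.sprint for r in runs})
    recent = observed[-sprints:]
    if len(recent) < sprints or recent != list(range(recent[0], recent[0] + sprints)):
        return False  # not enough consecutive sprints of Shadow Mode data yet
    return all(
        false_positive_rate([r for r in runs if r.sprint == s]) < max_fpr for s in recent
    )
```

Encoding the gate this way removes the temptation to renegotiate the number after the demo went well: the tool either clears 5% for three sprints in a row or it stays in Shadow Mode.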

4. The People Strategy: From Authors to Architects

The Mental Model: The Junior Engineer Analogy.

There is real anxiety in the market. “Agentic AI” looks like it does the job of a tester. If you ignore this fear, your team will resist the change. If you are too prescriptive (“You must learn prompt engineering!”), you will exhaust them.

The Pivot: Explain to your team that AI is the most productive Junior Engineer they will ever hire.

It is fast.

It is eager.

It hallucinates.

It doesn’t understand “User Experience.”

Your team’s value shifts from writing the code to reviewing the AI’s code. They are moving from Authors (typing syntax) to Architects (designing coverage).

Director’s Action:

The “AI Code Review” Workshop: Don’t just teach prompting. Run a session where the AI generates a test script and the humans have to find the bugs in the test. This reinforces their expertise and their new role as “Auditors.”

Change the Career Ladder: Explicitly add “AI Tool Evaluation” and “Prompt Context Management” to your Senior QA job descriptions. Show them the path forward.

5. The “Vendor Reality Filter”

The Mental Model: Trust but Verify.

As a Director, you are the gatekeeper against Vaporware. Vendors will promise you “Autonomous, Self-Maintaining, Magic Testing.” You need a filter.

The Strategy: When evaluating AI tools, shift the conversation from “What can it do?” to “How does it fail?”

Director’s Action:

Ask the 3 “Killer” Questions:

“Show me the logs when the AI gets it wrong. How easy is it to debug the AI’s hallucination?” (If they can’t show you, run.)

“How do I inject my specific business context (user stories, API docs) into the model?” (If it’s just generic training data, it won’t find deep bugs.)

“What is the cost of a retrain?” (If the UI changes, does the AI heal instantly, or do I need to re-record?)

6. The Governance Layer: The “Kill Switch”

The Mental Model: Fail Fast, Fail Cheap.

Innovation cannot be ad-hoc scope creep. It needs a policy. The biggest risk isn’t that an AI tool fails; it’s that it becomes a “Zombie Tool”—something that provides no value but sucks up maintenance time because no one wants to admit it didn’t work.

Director’s Action:

The “20% Rule”: Formalize this in writing. 20% of sprint capacity is allocated to the “Shadow Mode” experiments. This is non-negotiable capacity, not “nights and weekends” work.

The Kill Switch Policy: Empower your team to kill an AI pilot. If a tool requires more maintenance than the manual work it saves, kill it immediately. There is no shame in a failed experiment.
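Both rules are simple enough to encode, which makes them harder to quietly ignore. A minimal Python sketch, assuming the team logs hours saved and hours spent maintaining each pilot per sprint; the `PilotSprint` record and function names are hypothetical, and the kill check looks only at the latest sprint to match “kill it immediately.”

```python
from dataclasses import dataclass


@dataclass
class PilotSprint:
    hours_saved: float        # manual work the tool removed this sprint
    hours_maintaining: float  # time spent keeping the tool itself working


def experiment_capacity(team_hours_per_sprint: float, share_pct: int = 20) -> float:
    """Hours ring-fenced for Shadow Mode experiments under the 20% Rule."""
    return team_hours_per_sprint * share_pct / 100


def should_kill(history: list[PilotSprint]) -> bool:
    """Kill Switch: the latest sprint cost more maintenance than it saved."""
    if not history:
        return False  # no data yet, nothing to judge
    latest = history[-1]
    return latest.hours_maintaining > latest.hours_saved


print(experiment_capacity(400))  # 80.0
print(should_kill([PilotSprint(10, 4), PilotSprint(6, 9)]))  # True
```

Reviewing this check in every sprint retro is what stops a pilot from drifting into a Zombie Tool: the decision is made by the numbers, not by whoever championed the tool.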

Final Thought: The “Evaluation Engine”

We don’t need to be futurists to win this year. We just need to be organized.

If you build the Safe Passage framework today (Sandbox, Shadow Mode, the Architect Mindset), it doesn’t matter what tool comes out next month. You’ll be ready to ingest it, test it, and use it, while everyone else is still debating the theory.

Don’t wait for the future. Build the architecture to handle it.